Let's see how to read CSV data [in my case from the local file system, LFS] into an RDD and convert that RDD to a DataFrame using a case class.

Sample Data:-

1,Ram,Sita,Ayodha,32

2,Shiva,Parwati,Kailesh,33

3,Bishnu,Laxmi,Sagar,34

4,Brahma,Swarswati,Brahmanda,35

5,Krishna,Radha,Mathura,36

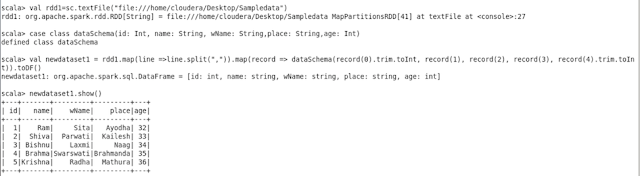

First, let's read the file:

scala> val rdd1=sc.textFile("file:///home/cloudera/Desktop/Sampledata")

[If you want to read from HDFS instead, pass an HDFS path such as hdfs://hostname:8020/user/cloudera/Sampledata]

Next, let's define the schema for the dataset using a case class:

scala> case class dataSchema(id: Int, name: String, wName: String,place: String,age: Int)

After defining the case class, let's map each record of the RDD to it:

scala> val newdataset1 = rdd1.map(line => line.split(",")).map(record => dataSchema(record(0).trim.toInt, record(1), record(2), record(3), record(4).trim.toInt)).toDF()

Here we apply the schema defined by the case class to the RDD rdd1: each line is split on commas, the fields are passed to dataSchema, and toDF() converts the result to a DataFrame.
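The one-liner above fails at runtime if a line has a missing field or a non-numeric id or age. As a minimal sketch of a more defensive parsing step, the plain Scala below (no Spark needed) returns None for such lines. The names CsvParseSketch, PersonRecord and parseLine are hypothetical and not part of the original walkthrough; the fields mirror the dataSchema case class.

```scala
import scala.util.Try

object CsvParseSketch {
  // Same fields as dataSchema in the post; the name PersonRecord is an
  // assumption made for this sketch.
  case class PersonRecord(id: Int, name: String, wName: String, place: String, age: Int)

  // Split one CSV line and build a record.
  // Returns None when the field count is wrong or id/age is not an integer.
  def parseLine(line: String): Option[PersonRecord] = {
    // limit -1 keeps trailing empty fields so the length check is reliable
    val fields = line.split(",", -1).map(_.trim)
    if (fields.length != 5) None
    else Try(
      PersonRecord(fields(0).toInt, fields(1), fields(2), fields(3), fields(4).toInt)
    ).toOption
  }

  def main(args: Array[String]): Unit = {
    val lines = Seq("1,Ram,Sita,Ayodha,32", "2,Shiva,Parwati,Kailesh,33", "bad,line")
    // Malformed lines are dropped because flatMap discards None
    lines.flatMap(parseLine).foreach(println)
  }
}
```

In spark-shell the same function could be applied to the RDD, for example rdd1.flatMap(line => CsvParseSketch.parseLine(line)), so that malformed rows are skipped instead of failing the job.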

scala> newdataset1.show() [this displays the contents of the DataFrame]


We can also register the DataFrame as a temporary table and query it with SQL.

Let's register the DataFrame as a table in Spark:

scala> newdataset1.registerTempTable("Newtbl")

The DataFrame newdataset1 is now registered as the table Newtbl.

Now let's query the table using SQL syntax:

scala> newdataset1.sqlContext.sql("select * from Newtbl where age>33").collect.foreach(println)

This runs the SQL query and prints the matching rows. In this way, many complex tasks can be done easily.
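To check which rows the query should return, the same condition can be sketched on plain Scala collections, without Spark. The object AgeFilterSketch and the method olderThan are hypothetical names used only for this illustration; the rows are copied from the sample data.

```scala
object AgeFilterSketch {
  // (id, name, wName, place, age) tuples copied from the sample data
  val sampleRows: Seq[(Int, String, String, String, Int)] = Seq(
    (1, "Ram", "Sita", "Ayodha", 32),
    (2, "Shiva", "Parwati", "Kailesh", 33),
    (3, "Bishnu", "Laxmi", "Sagar", 34),
    (4, "Brahma", "Swarswati", "Brahmanda", 35),
    (5, "Krishna", "Radha", "Mathura", 36)
  )

  // Plain-collection counterpart of: select * from Newtbl where age > minAge
  def olderThan(minAge: Int): Seq[(Int, String, String, String, Int)] =
    sampleRows.filter(_._5 > minAge)

  def main(args: Array[String]): Unit =
    // Keeps the rows with ids 3, 4 and 5, since their ages are above 33
    olderThan(33).foreach(println)
}
```

The Spark query applies the same age > 33 condition, so for the sample data it should print the rows for Bishnu, Brahma and Krishna.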

Thank you!
